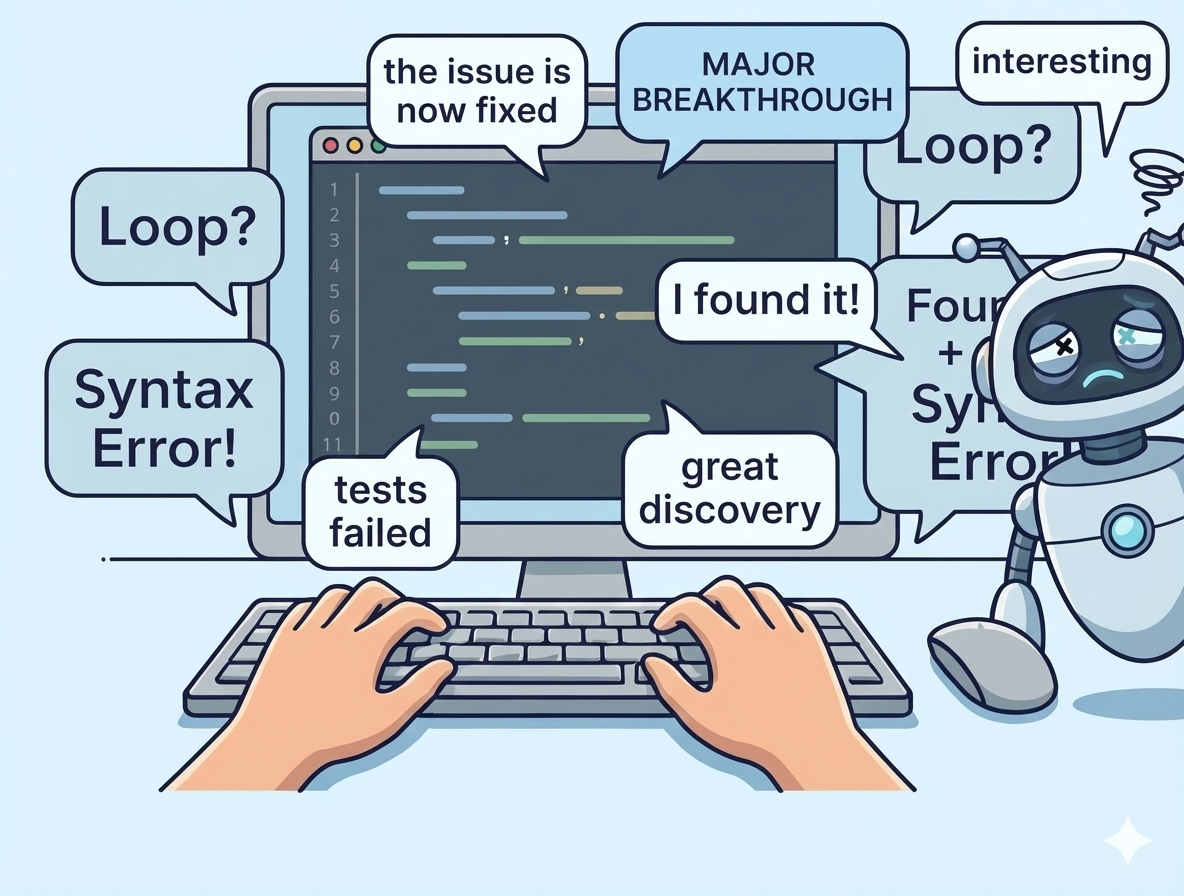

Have you ever done an agentic coding session? I mean, not just using AI for auto-completion or writing specific functions. I mean giving it an entire task and saying “good luck, buddy”. If you have, you must have seen an AI agent go into a loop without making any progress. It keeps reporting “I found it!”, “great discovery”, and “the issue is now fixed”. Then the tests fail, to which it says “interesting 🤔”, shortly before circling back to claim it has a “MAJOR BREAKTHROUGH” 🤦‍♀️. The longer this cycle continues, the more it repeats its own mistakes, ignores your rules and standards, or pushes for the same failed solutions. All the while, it stops to ask how you wish to continue, proposing several options, all of which seem like a shot in the dark, but hey, maybe this time it will work.

The curse is, with the level of independence agents already have today, you probably don’t sit there and watch them work. Most likely, you have several other agents working on other tasks. Now whenever you cycle back to this one problematic agent, you’re tempted to give it another chance. And then another, and then another. There are two main reasons for this:

- Well, this is an agentic coding session. Which means, you’re not yet familiar with the code changes, you probably haven’t executed the code yet, and if you wanted to, you need at least several minutes of complete focus to get yourself up to speed. Maybe it’s easier if the agent does all of that. Maybe we should just give it another shot.

- You’re working on other things as well. If one of those tasks is moving slower, it’s fine. As long as we have some good parallel action going on.

Stop! ✋

Well, I came to realize this is far from ideal for me, and for several reasons:

- No priority management. Most likely, one of those tasks is more important than the others. However, I might give more focus to the wrong ones for any number of technical reasons, and end up starving the most urgent task for several hours. Circling back to the urgent task every 30 minutes just to type “yes” and hit Enter is probably not the amount of focus we would have given this task if we were not swamped with agent mechanics elsewhere.

- No time management. Surely, I’ll be done in 30 minutes, no? After all, we just had a “MAJOR BREAKTHROUGH”. 5 minutes later, you realize you might never finish this… Hey, ignore it, we just found the bug. 😵‍💫 But to be honest, this is something that also happened in the good old days of manual debugging. However, you have even less control and understanding when the agent is leading the way.

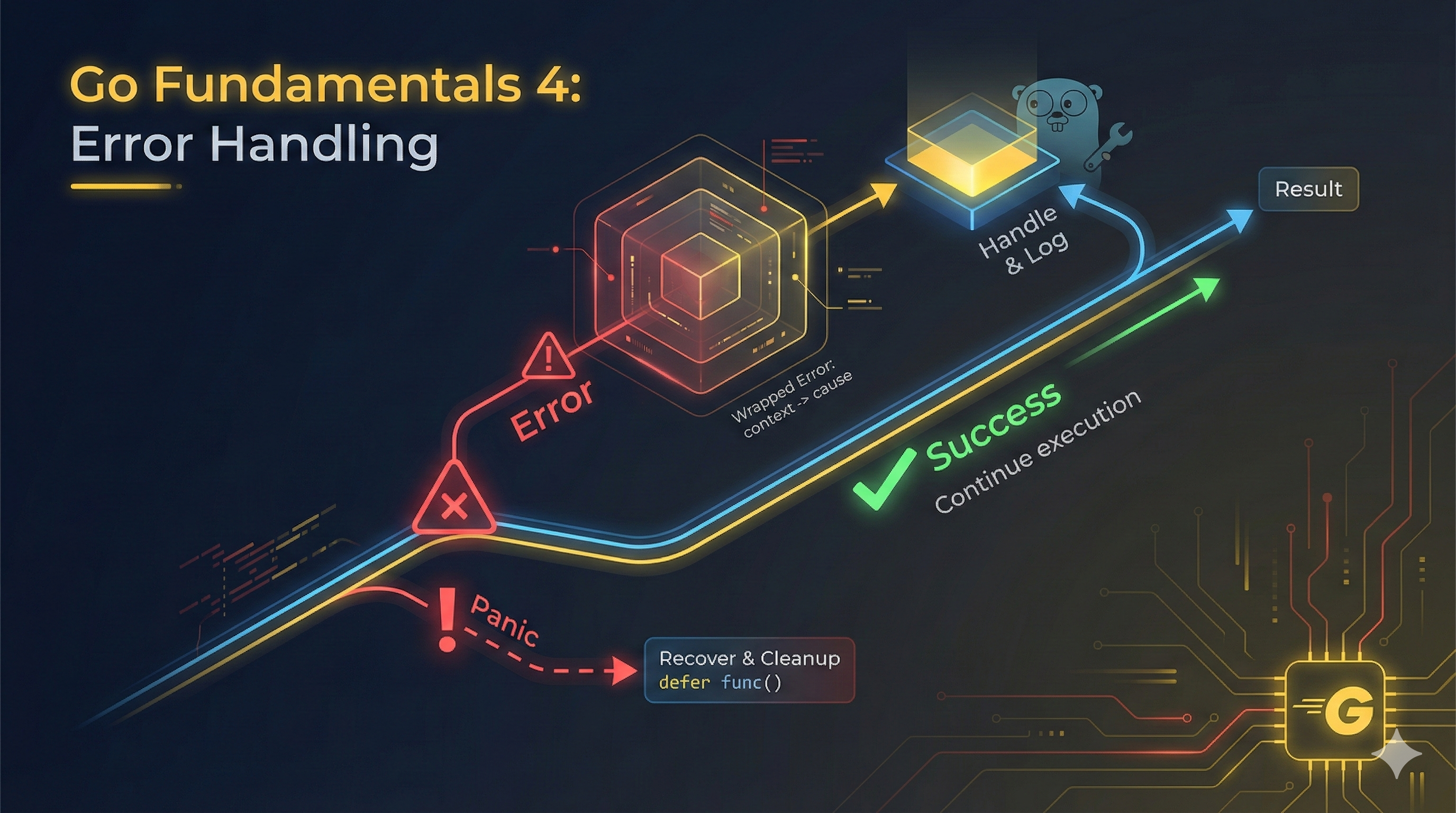

- Most importantly, let’s be honest: 9 out of 10 times it’s not going to get out of the loop. If you peek into the mechanics of the communication between the agent and the LLM, you’d see the agent sending the entire conversation history on each LLM request. That makes sense, since the LLM doesn’t store state. It also allows the agent to be more flexible and choose a model on each request to account for performance, capabilities, and cost. However, it means that throughout the session, each request makes the context bigger and bigger. And since the agent is stuck in a loop of trying to debug and fix an issue, the context grows rapidly. This makes it harder for the LLM to focus. The bigger the context is, the more it’s filled with rules, requests, requirements, standards, and plans. And just like humans, there’s a limit to what it can effectively remember, focus on, and in turn, comply with. On top of that, you have context compression. Each agent sets a limit on the context size it sends to the LLM. Whenever this limit is reached, the entire context gets compressed down to a smaller size. In other words, the LLM now gets only a summary of the session history. That means any previous request, interaction, bug fix, identified root cause, etc., is now at the mercy of the LLM that wrote the TL;DR of the conversation during the last compression. The longer this loop lasts, the less likely the agent is to find a solution, respect the standards, and avoid repeating previous mistakes. Chances are it’s not going to make it out of the loop without you giving it more attention. Much more attention.

I mean waaay more attention. It won’t help if you spend 40 more seconds on the prompt, it most likely won’t help if you intimidate and threaten the agent (it may improve things but probably won’t solve your problems), and it might not even help if you throw in your own ideas. In most cases, at this point, you would have to take charge.
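To make the context mechanics described above more concrete, here is a toy simulation. It is only a sketch: the `Session` class, the token counts, the limit, and the summary size are all made-up assumptions for illustration, and real agents count tokens and compress in their own ways.

```python
from dataclasses import dataclass, field


@dataclass
class Session:
    """Toy model of an agent session. All numbers are hypothetical."""
    limit: int = 1000         # assumed context limit, in tokens
    summary_size: int = 200   # assumed size of a compressed summary
    history: list[int] = field(default_factory=list)  # tokens per message
    compressions: int = 0

    def context_size(self) -> int:
        return sum(self.history)

    def send(self, message_tokens: int) -> int:
        """Add a message, compress if over the limit, return the context size sent."""
        self.history.append(message_tokens)
        if self.context_size() > self.limit:
            # The whole history collapses into a single lossy summary.
            self.history = [self.summary_size]
            self.compressions += 1
        return self.context_size()


session = Session()
# Twelve debugging rounds of 150 tokens each.
sizes = [session.send(150) for _ in range(12)]
print(sizes)
print("compressions:", session.compressions)
```

In this toy run the context grows on every round until it crosses the limit, and then everything the agent learned so far is squeezed into one summary. Whatever the summary left out is gone for the rest of the session.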

How to Take Charge 💪

There are many ways to take charge, depending on the limitations the agent is experiencing. The following is what I have so far.

- You can always start by familiarizing yourself with the code it wrote. If you don’t want to spend too much time, give it chunks of code changes and ask it to summarize and explain what it did. LLMs usually perform well when you confront them on the code they wrote and the implementation choices they made.

- Scrutinize every decision it made. Start by asking the agent to provide a full design document, explaining every design choice it made and the list of items it still needs to implement. Then walk through every item and ask it to explain why it was made, and explore alternatives. Help it identify issues and improve its decision-making. Shoutout to my colleague Orr for using this technique to get his agent out of an extremely complicated loop! 👑

- Provide it with new capabilities like MCP or CLI options. Most likely, the LLM is missing a stronger feedback loop to be able to debug the issue and get to the root cause. Make sure it can view the output, run the code, experiment, and get any possible input.

- If you prefer, now that this task has your undivided attention, switch to good old “step aside sir, I want to debug this thing myself” mode. Ask the agent for instructions to run the code yourself. Even if you have no idea how to do it, spend 5 minutes setting up an effective debugging station and hack this thing wide open. And after you’re done, ask yourself what it was that allowed you to find the issue but was hidden from the LLM. Maybe next time it just needs better access to some input.

Where Do We Go From Here

Now many of you would say, AI is bul**** 💩, using it brings more pain than gain. For serious software, you’d better avoid it. Or, AI is good for code completion, but not as an autonomous agent. I disagree. I think that its ability to produce 80% of the solution in just a few minutes is groundbreaking. I think the models will get better and better, but in the meantime, this 80% is still explosive 🧨. It writes huge designs, implements very quickly, introduces technologies that you’ve never used before, allows you to be any type of developer that you want to be, and then it gets stuck. Until the models get better, I think the secret is to identify that point fast, and then take charge.

A rule of thumb for myself: be mindful of how likely each agent is to go on and complete its task. If the answer for one of the agents is “unlikely”, stop! ✋ And ask yourself what the priority of this task is. Think like a team lead managing a team of agents. Only in this team, you, the team lead, are the bottleneck. If the agent who’s stuck is the priority, drop the other tasks and take charge. If it isn’t, make sure not to spend more than a few seconds on it. It shouldn’t starve tasks with higher priority. Either let the agent keep iterating, consuming countless tokens, in the hope that maybe, just maybe, it succeeds, or put the task aside until it becomes the priority, and then take charge.
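If it helps, the rule of thumb above can be written down as a tiny decision function. This is just my reading of it as a sketch. The `Action` names and the two boolean inputs are hypothetical, and in practice “likely to finish” is a gut call, not a flag.

```python
from enum import Enum


class Action(Enum):
    LET_IT_RUN = "let it keep working"
    TAKE_CHARGE = "drop the other tasks and take charge"
    DEPRIORITIZE = "spend seconds at most: let it iterate or park it"


def triage(likely_to_finish: bool, is_top_priority: bool) -> Action:
    """Decide what to do with one agent, following the rule of thumb."""
    if likely_to_finish:
        return Action.LET_IT_RUN
    if is_top_priority:
        # A stuck agent on the most important task gets your full focus.
        return Action.TAKE_CHARGE
    # Stuck but not urgent: it must not starve higher-priority tasks.
    return Action.DEPRIORITIZE


print(triage(likely_to_finish=False, is_top_priority=True).value)
```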

It’s also worth exploring clear rules and boundaries as agent context for it to auto-identify such loops and stop on its own before running and burning more and more tokens. This is something I intend to play with and report back, although as mentioned above, in such situations the agent’s ability to keep respecting rules is worn out.
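One thing that might complement rules in the agent context is a check that lives outside the agent, so it doesn’t depend on the agent still respecting anything. Below is a minimal sketch of that idea, not something I have tested on real sessions. It assumes you can collect the failure output of each attempt as a string. The function names and the threshold of three repeats are arbitrary choices for illustration.

```python
from collections import Counter


def normalize(failure_output: str) -> str:
    """Reduce a failure message to a rough signature: lowercase, collapsed whitespace."""
    return " ".join(failure_output.lower().split())


def looks_stuck(failures: list[str], max_repeats: int = 3) -> bool:
    """Return True once any failure signature has shown up max_repeats times."""
    counts = Counter(normalize(f) for f in failures)
    return any(n >= max_repeats for n in counts.values())


# Hypothetical failure outputs from four consecutive attempts.
attempts = [
    "AssertionError: expected 3, got 2",
    "TypeError: 'NoneType' object is not iterable",
    "AssertionError: expected 3, got 2",
    "assertionerror:  expected 3, got 2",
]
print(looks_stuck(attempts))
```

A signature this crude will miss loops where the error message changes slightly each time, so treat it as a starting point for a “stop and call the human” signal and nothing more.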

I usually write about things that I’ve experimented with for years, leading my thoughts and opinions to a mature, constant place. The focus of coding skills is shifting towards coding agents, and I find my curiosity going to this place as well. However, this world is changing so fast that I might find myself looking at this post 6 months from now, saying “God, what was I thinking 🤦‍♀️”. This is a reminder for future me: I write these posts mainly to organize my thoughts, and understand them better. Please go easy on yourself 😝❤️